Authors

- Jiyoung Lee✲, Yonsei University

- Soo-Whan Chung✲, Yonsei University, Naver Corporation

- Sunok Kim, Korea Aerospace University

- Hong-Goo Kang♱, Yonsei University

- Kwanghoon Sohn♱, Yonsei University

✲ equal contribution

♱ co-corresponding authors

Abstract

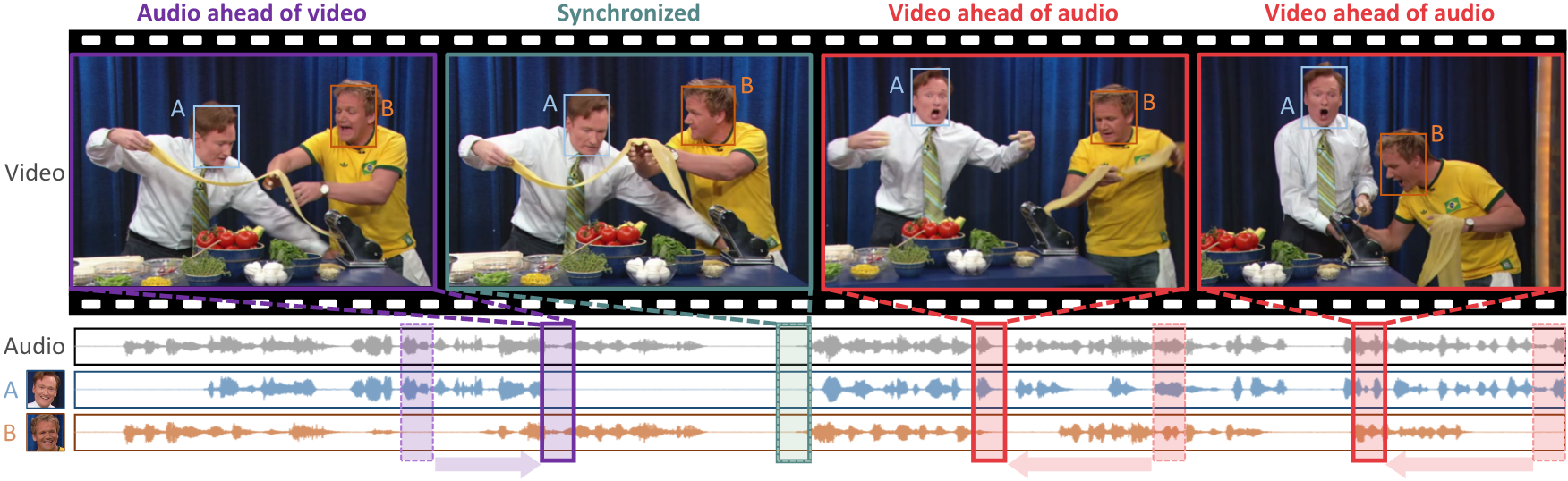

In this paper, we address the problem of separating individual speech signals from videos using audio-visual neural processing. Most conventional approaches rely on frame-wise matching criteria to extract the information shared between co-occurring audio and video. Their performance therefore depends heavily on the accuracy of audio-visual synchronization and on the effectiveness of their representations. To overcome the frame discontinuity between the two modalities caused by transmission delay mismatch or jitter, we propose a cross-modal affinity network (CaffNet) that learns a global correspondence as well as locally varying affinities between the audio and visual streams. Because the global term provides stability over the temporal sequence at the utterance level, it also resolves the label permutation problem, which is characterized by inconsistent assignments across time. By extending the proposed cross-modal affinity to the complex network, we further improve separation performance in the complex spectral domain. Experimental results verify that the proposed methods outperform conventional ones on various datasets, demonstrating their advantages in real-world scenarios.
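The idea of matching each audio frame against many video frames, rather than only the co-indexed one, can be illustrated with a minimal NumPy sketch. It is not CaffNet's published formulation: the cosine affinity, the softmax attention with a `temperature` parameter, and the time-averaged "global" similarity are assumptions chosen for illustration, and `cross_modal_affinity` is a hypothetical helper. Audio and visual features are assumed to be already encoded to a shared channel size C.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-8):
    # Scale vectors to unit length so dot products become cosine similarities.
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def softmax(x, axis=-1):
    # Numerically stable softmax.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def cross_modal_affinity(audio, visual, temperature=0.1):
    """Hypothetical affinity sketch (assumed design, not the paper's exact model).

    audio:  (T_a, C) per-frame audio features
    visual: (T_v, C) per-frame visual features, same channel size C assumed
    """
    a = l2_normalize(audio)
    v = l2_normalize(visual)

    # Local term: cosine affinity between every audio frame and every video frame.
    local = a @ v.T  # (T_a, T_v)

    # Each audio frame attends over all video frames instead of only the
    # co-indexed one, so a delayed or jittered video frame can still be matched.
    weights = softmax(local / temperature, axis=1)
    attended_visual = weights @ visual  # (T_a, C)

    # Global term: one utterance-level similarity between time-averaged
    # embeddings, which is largely insensitive to frame-level misalignment.
    global_sim = float(l2_normalize(a.mean(axis=0)) @ l2_normalize(v.mean(axis=0)))

    return {"local": local, "weights": weights,
            "attended_visual": attended_visual, "global": global_sim}


# Toy usage: a circular shift stands in for a 2-frame video delay.
rng = np.random.default_rng(0)
audio = rng.standard_normal((20, 16))
visual = np.roll(audio, 2, axis=0)  # visual[t + 2] == audio[t]
out = cross_modal_affinity(audio, visual)
print(np.argmax(out["local"], axis=1)[:5])  # [2 3 4 5 6]
```

In this toy run a strict frame-wise comparison would pair mismatched frames, while the affinity matrix still peaks two frames off the diagonal and the utterance-level similarity stays close to 1.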

Network Configuration

Overall network configuration: (1) encoding individual audio and visual features; (2) learning cross-modal affinity; (3) predicting a spectrogram soft mask $\mathbf{M}$ to reconstruct the target speech $\hat{\mathbf{Y}}$. The red dotted region denotes processing in the magnitude domain.
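The final masking step, and the difference between the magnitude path (red dotted region) and the complex spectral domain mentioned in the abstract, can be sketched in NumPy. This assumes the mixture is a complex STFT array of shape (frequency, time) and that a mask has already been predicted; `apply_magnitude_mask` and `apply_complex_mask` are hypothetical helpers, and the oracle masks below are computed from a known target purely for comparison, not predicted by a network.

```python
import numpy as np


def apply_magnitude_mask(mixture, mask):
    # Real-valued mask scales the mixture magnitude; the mixture phase is reused as-is.
    return mask * np.abs(mixture) * np.exp(1j * np.angle(mixture))


def apply_complex_mask(mixture, mask_real, mask_imag):
    # Complex multiplication (Mr + jMi)(Xr + jXi): scales magnitude and rotates phase.
    xr, xi = mixture.real, mixture.imag
    return (mask_real * xr - mask_imag * xi) + 1j * (mask_real * xi + mask_imag * xr)


rng = np.random.default_rng(0)
shape = (257, 100)  # (frequency bins, time frames), chosen arbitrarily
target = rng.standard_normal(shape) + 1j * rng.standard_normal(shape)
interference = rng.standard_normal(shape) + 1j * rng.standard_normal(shape)
mixture = target + interference

# Oracle masks computed from the known target, only to compare the two domains.
mag_mask = np.abs(target) / np.abs(mixture)
cplx_mask = target / mixture

est_mag = apply_magnitude_mask(mixture, mag_mask)
est_cplx = apply_complex_mask(mixture, cplx_mask.real, cplx_mask.imag)

print(np.allclose(np.abs(est_mag), np.abs(target)))  # True: magnitude recovered
print(np.allclose(est_mag, target))                  # False: phase is still the mixture's
print(np.allclose(est_cplx, target))                 # True: complex mask recovers both
```

A real-valued mask can only rescale magnitudes, so its estimate inherits the mixture phase; a complex mask can also rotate the phase, which is one motivation for working in the complex spectral domain.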

Demo Samples

Paper


J. Lee*, S.-W. Chung*, S. Kim, H.-G. Kang, K. Sohn. Looking into Your Speech: Learning Cross-modal Affinity for Audio-visual Speech Separation. [Paper] [Code (available soon)]

BibTeX

@inproceedings{lee2021looking,
  title={Looking into Your Speech: Learning Cross-modal Affinity for Audio-visual Speech Separation},
  author={Lee, Jiyoung and Chung, Soo-Whan and Kim, Sunok and Kang, Hong-Goo and Sohn, Kwanghoon},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2021}
}